Why we should be cautious about relying on AI for cancer diagnosis

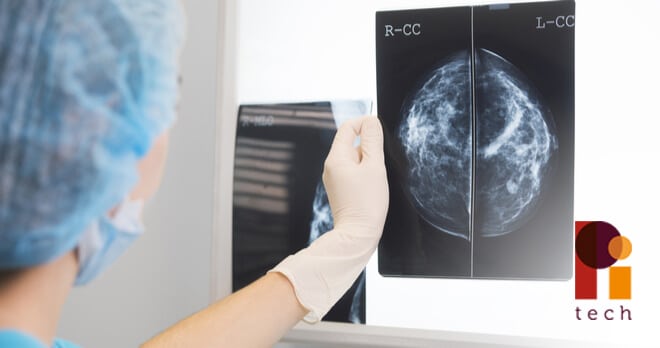

As you may have read last month, researchers from Google and medical centres in both the United States and Britain have been working on an algorithm to help doctors more accurately identify breast cancer on mammograms. Whilst the new system is not yet available within the medical sector, the idea is to write a program that recognises patterns consistent with cancer to assist doctors in interpreting the images obtained from scans.

The algorithm has been tested on breast images where the diagnosis was already known. In the American study it produced a 9.4% reduction in false negatives, i.e. cases where a radiologist reported the mammogram as normal when cancer was in fact present. It also produced a 5.7% reduction in false positives, i.e. cases where a radiologist reported a scan as abnormal when there was in fact no cancer.

By comparison, in Britain the system reduced false negatives by 2.7% and false positives by 1.2%.
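To make the two error types concrete, here is a minimal Python sketch of how false negative and false positive rates are usually calculated from screening results. The function name and all the counts are made up for illustration; they are not taken from the study, and the research team may have measured things differently.

```python
def error_rates(true_pos, false_neg, false_pos, true_neg):
    """Return (false_negative_rate, false_positive_rate) as fractions."""
    # False negative rate: share of scans WITH cancer reported as normal.
    fnr = false_neg / (true_pos + false_neg)
    # False positive rate: share of scans WITHOUT cancer reported as abnormal.
    fpr = false_pos / (false_pos + true_neg)
    return fnr, fpr


# Hypothetical counts for 1,000 screening mammograms (illustrative only).
fnr, fpr = error_rates(true_pos=8, false_neg=2, false_pos=50, true_neg=940)
print(f"False negative rate: {fnr:.1%}")
print(f"False positive rate: {fpr:.1%}")
```

In this invented example far more people receive a false alarm (50) than have a cancer missed (2), simply because most people who are screened do not have cancer. That is why even a modest false positive rate can affect a large number of patients.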

Whilst it seems a very positive step for AI to be used to help diagnose cancer, is there a dark side to AI diagnosis? Many healthcare professionals have expressed concern about reliance on this type of technology.

Healthcare providers have commented that, whilst an algorithm may be able to interpret imagery, AI cannot replicate the subtle, judgment-based skills of nurses and doctors. It is also possible that this use of technology could exacerbate existing problems with the over-diagnosis of cancers.

Why is over-diagnosis of cancer a problem?

Many of us would consider it a positive step to have cancer diagnosed much earlier. It is widely understood that early intervention and treatment will increase our chance of survival, so we often overlook the cases where an incorrect diagnosis is made, or where the cancer discovered is not actually going to have a significant impact on someone’s life.

Once something has been diagnosed as cancer, though, whether correctly or incorrectly, it sets off a chain of medical intervention which can be life-changing and difficult to stop. This is the case even if it is known that an error may have been made or that the treatment may have been unnecessary.

As an example, I recently acted in a case for an individual who was incorrectly diagnosed with cancer and, as a result, ended up having his entire lung removed. This all happened before medical staff determined that he in fact didn’t have lung cancer at all, and was instead suffering from an abscess which would, most likely, have resolved with a high-dose, broad-spectrum antibiotic. Surgery would have been an unlikely option in this case.

So, as a medical negligence solicitor, I am extremely concerned that any over-diagnosis of cancer through potential false positives could have a severe and damaging impact on an individual’s life.

Right now the algorithm is in its very early stages and, as such, requires a lot more testing before it is ready to be used in a medical context. I, for one, will be watching with great interest to see whether this step forward will be as positive as Google suggests. However, what is clear is that AI will not replace medically trained staff any time soon, and with good reason.